While adjusting my horn patch in EastWest Play, I noticed CPU usage spike to 80% at one point. I know that figure is for a single core, and I'm not super worried about it (I'm on a Ryzen 7 3700X), but I'd still like to avoid it. At first I thought the mic positions I had enabled were the cause, but then I noticed that the other instruments were still being triggered even though they were muted. (A duh moment, but it hadn't crossed my mind before.)

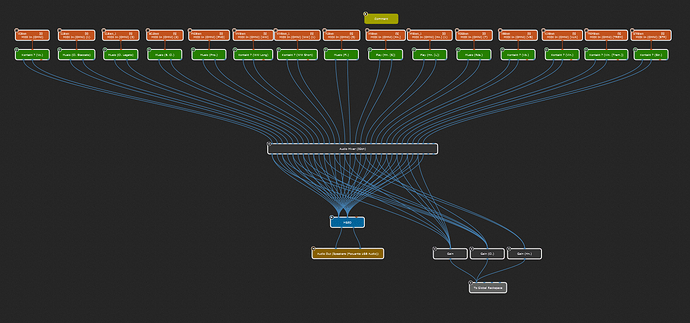

The rackspace I'm using has 15 instruments loaded, so I can use variations and just mute the ones I don't need. Then I thought: instead of muting, why not use BlockNoteOn? If no Note On messages reach an instrument, it won't get triggered, so in theory that 80% spike shouldn't happen, right? Out of curiosity, I asked ChatGPT, and this was its response:

> […] it's worth noting that blocking NoteOn messages can also have unintended consequences, such as preventing certain parts of a MIDI sequence from playing correctly. Additionally, the amount of CPU saved by blocking NoteOn messages may be negligible in some cases, depending on the specific instrument and the number and frequency of incoming MIDI messages.

For the instruments that aren't being used, I'm not worried about silence or losing note overlap. What do y'all think? Is there any reason not to use BlockNoteOn in this case?
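For reference, here's a rough sketch of the filtering logic I have in mind. It's generic Python working on raw MIDI bytes, not Gig Performer's actual BlockNoteOn implementation; the function names are my own, made up for illustration. It drops Note On messages while letting everything else through, including Note Off and Note On with velocity 0 (which MIDI treats as Note Off).

```python
from typing import Iterable, Iterator

def is_note_on(msg: bytes) -> bool:
    # Hypothetical helper: Note On status bytes are 0x90-0x9F (channel in the low nibble).
    # A Note On with velocity 0 is treated as Note Off by MIDI convention, so it is excluded here.
    return len(msg) == 3 and (msg[0] & 0xF0) == 0x90 and msg[2] > 0

def filter_note_on(messages: Iterable[bytes], blocked: bool) -> Iterator[bytes]:
    """Hypothetical filter: yield only the messages that should reach the instrument."""
    for msg in messages:
        if blocked and is_note_on(msg):
            continue  # dropped, so the instrument is never triggered by this note
        yield msg  # Note Off, CC, pitch bend, etc. still pass through

stream = [
    bytes([0x90, 60, 100]),  # Note On, middle C
    bytes([0x80, 60, 0]),    # Note Off, middle C
    bytes([0xB0, 64, 127]),  # sustain pedal down (CC 64)
    bytes([0x90, 62, 0]),    # Note On with velocity 0, i.e. Note Off
]
print(list(filter_note_on(stream, blocked=True)))
```

Letting Note Off through is deliberate: if a note was already sounding when the instrument got blocked, it still receives its release, so nothing gets stuck. That's roughly the "unintended consequences" concern, just handled on the release side.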