Hello guys, I have a question about how Gig Performer calculates its CPU usage percentage.

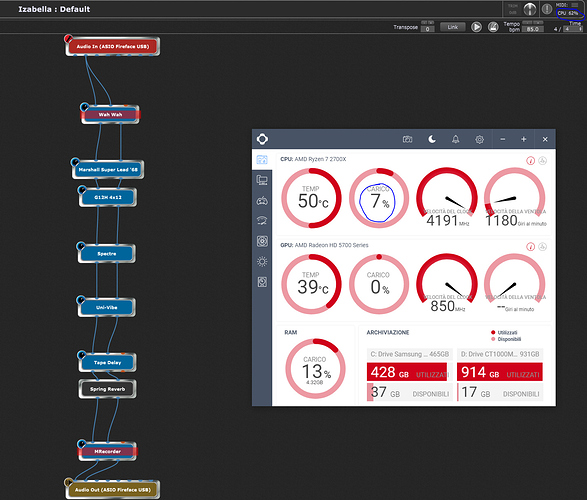

I have a Ryzen 2700X CPU. As you can see in the image, Gig Performer shows 62% CPU usage, while the CPU overall is only at around 8%. So that’s my question!

Is there a way to “extend” the capability of GP to use more CPU? Is there a reason why a VST host shouldn’t use all the CPU?

Gig Performer’s CPU meter is measuring audio processing cycles only, not the overall CPU usage. Is Gig Performer glitching or otherwise not working properly on your system?

I think it’s working properly. I thought that the “quantity” of plugins you can put in the chain would depend on the CPU.

Let’s say I have a rackspace with the CPU meter above 55-60%. When I switch to this rackspace (from another rackspace, using a MIDI controller for example), it glitches, so I have to remove some plugins or reduce oversampling. That’s because, while switching from another rackspace, the meter reaches 100% for a second or two.

When that happens, I see that the CPU overall is nowhere near overload, which is why I’m asking why this happens.

What sampling rate/buffer size are you using?

It’s not the quantity – some plugins are way more intensive than others (sometimes way more than they ought to be, IMO). So you could have a rackspace with 30 plugins and everything is gorgeous and another rackspace with 2 plugins and have grief if one of them uses an absurd amount of CPU cycles. Doesn’t happen too often but it can happen if the plugin developer didn’t do a good job managing CPU cycles in the audio processing section of his/her plugin.
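To make the point about per-plugin cost concrete, here is a minimal Python sketch. It assumes a single serial chain whose plugins run one after another on the audio thread, so their per-buffer costs add up, and it uses made-up costs in milliseconds, not measurements of any real plugin. The chain keeps up only if the total stays under the buffer period (buffer size divided by sample rate).

```python
def buffer_period_ms(buffer_size: int, sample_rate: int) -> float:
    """Time available to process one buffer, in milliseconds."""
    return 1000.0 * buffer_size / sample_rate


def chain_fits(costs_ms: list[float], buffer_size: int, sample_rate: int) -> bool:
    """A serial chain fits if the summed plugin costs finish before the buffer is due."""
    return sum(costs_ms) < buffer_period_ms(buffer_size, sample_rate)


# Hypothetical per-buffer costs (ms), for illustration only.
light_chain = [0.05] * 30   # 30 lightweight plugins, about 1.5 ms in total
heavy_chain = [1.2, 1.9]    # 2 demanding plugins, about 3.1 ms in total

# 128 samples at 48 kHz gives roughly 2.67 ms per buffer.
print(chain_fits(light_chain, 128, 48_000))  # True
print(chain_fits(heavy_chain, 128, 48_000))  # False
```

The plugin count never appears in the check; only the summed cost against the time budget does.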

But since the overall used cycles can’t be fewer than the ones used for audio processing, how can the overall load be lower than the audio load?

His system is probably giving the average of multiple cores.

Suppose, for example, you have 8 cores and you’re running some intensive app on one of them (e.g. it’s using 80% of one core).

The “average” overall CPU usage will be 10%. How useful is knowing that number?
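The arithmetic behind that example, as a small Python sketch with hypothetical per-core readings: an overall figure that averages across cores hides a single busy core, while the maximum reveals it.

```python
def average_load(per_core: list[float]) -> float:
    """An 'overall' CPU figure computed as the mean across all cores."""
    return sum(per_core) / len(per_core)


def busiest_core(per_core: list[float]) -> float:
    """The load on the most heavily used core."""
    return max(per_core)


# Hypothetical readings: one core at 80%, the other seven idle.
cores = [80.0] + [0.0] * 7
print(average_load(cores))  # 10.0
print(busiest_core(cores))  # 80.0
```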

So the GP CPU meter shows the utilization of the main audio thread where 100% equals full utilization of a single core?

While we’re talking about useful metrics: what about the ratio of the time taken to fill the buffer to the buffer length (in time, not samples)?

I think that would be very useful.

EDIT: Just realized that I heard about that idea in an older post from the Cantabile blog.
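The ratio proposed above (processing time for one buffer divided by the buffer’s duration) can be sketched in Python as follows. This only illustrates that proposed metric; it is not how Gig Performer or Cantabile actually compute their meters, and the timings are invented. It also shows why a short spike, like the one during a rackspace switch, can cause a glitch even when the average looks comfortable: any single buffer with a ratio of 1.0 or more is delivered late.

```python
def dsp_load(processing_time_s: float, buffer_size: int, sample_rate: int) -> float:
    """Processing time for one buffer divided by the buffer's duration in seconds."""
    buffer_duration_s = buffer_size / sample_rate
    return processing_time_s / buffer_duration_s


def summarize(times_s: list[float], buffer_size: int, sample_rate: int) -> tuple[float, float, int]:
    """Return (average load, peak load, number of late buffers) for a run of callbacks."""
    loads = [dsp_load(t, buffer_size, sample_rate) for t in times_s]
    late = sum(1 for load in loads if load >= 1.0)
    return sum(loads) / len(loads), max(loads), late


# Hypothetical per-callback processing times at 256 samples / 48 kHz (about 5.33 ms per buffer).
times = [0.002, 0.0025, 0.006, 0.002]
avg, peak, late = summarize(times, 256, 48_000)
print(f"average {avg:.0%}, peak {peak:.0%}, late buffers {late}")
```

Here the average stays below 60%, yet one callback overruns its buffer, which is exactly the kind of event a listener hears as a glitch.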

I’m starting to wonder what the heck people are doing with Gig Performer at this point…

Me? I run it, insert a bunch of plugins, connect them up to my controllers, configure songs etc and play music

Hmm, I’m wondering if this is something Windows users worry about more in general. I’m seeing a lot more trouble with glitches and overall audio performance on Windows than I did with MacBooks. I don’t have to worry about stability and performance on stage with Windows either, but during preparation I have to worry much more about this stuff.

My suspicion is that macOS audio processing is just a bit more efficient. Sometime next year I’ll buy a new laptop; maybe I’ll be able to do a side-by-side comparison to check.

Sure. Remember that Apple built in support for real-time audio and MIDI processing a long time ago. That’s why there are preferences where you can configure your MIDI devices and do things like aggregate multiple audio interfaces into a single “virtual” interface that your DAW or Gig Performer can see.

OS X itself is built on top of BSD Unix along with the real-time Mach kernel originally developed at CMU.

In the Windows world, real-time audio and MIDI have always been something that got bolted on, hence third-party stuff like ASIO4ALL and third-party virtual MIDI drivers, etc.

I’m considering converting to the world of fewer glitches. My Windows unit is really starting to irk me.

If you set up your Windows machine right, you won’t have any audio or CPU problems, and you’ll get it for a lot less coin. What are you using?

You’re right… there’s probably more I can pay attention to in the setup. I use an ASUS G75VW with an i7 at 2.30 GHz. I’ll have it working perfectly at home, then get to a gig and sometimes it won’t work the same. The main problem I’m having right now is with my iLok for Pro Tools: lately, when I plug in the dongle, I get the Blue Screen of Death. I’ve tried everything. It looks like I’ll have to wipe the disk and reinstall everything. I know it’s still cheaper than a Mac… it’s just time-consuming tracking down all the codes for my apps and plugins.

I’m not sure what’s up with your iLok and Pro Tools. I use an iLok for a few of my plugins and have no problems, except that I transfer the licenses from the dongle to my hardware. You could try this and see if it helps. Pro Tools is known to be buggy on Windows but should be getting better as more and more producers move from Mac to Windows these days.

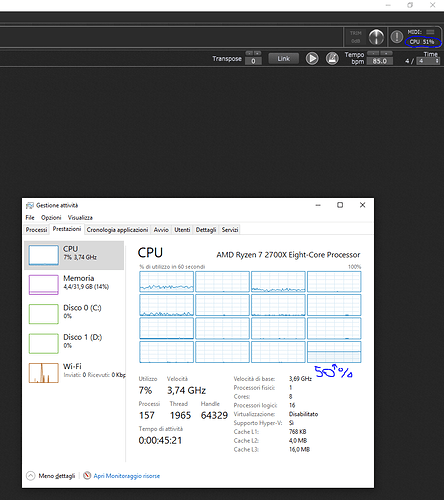

As you can see in this image (sorry for the Italian, but you can still see it), one of the 16 logical cores of my CPU (the 2700X has 8 cores / 16 threads) is at 50% load, just like Gig Performer displays. The answer could be that Gig Performer only uses one core, so Intel CPUs might be the better choice because they have higher clock speeds (the i9-9900K can reach 5 GHz).

We all do, but I’m a guitarist and all my sound comes from plugins. I have one plugin for amp simulation, another for chorus, another for delay, etc. That’s because we try to get the best sound out of our gear, and most of the time oversampling and using a separate plugin for each effect lets you use the best tools you have.

I could use Bias FX for all that stuff, but you won’t get the same quality as with FabFilter or MeldaProduction plugins, for example.

The question now might be: is there really a way to distribute the CPU load across different cores? The answer might be multi-instance support. I’ll do some experiments to see how multiple instances distribute their “weight” across different cores. I’m just discovering that now!

Thank you, Simon. I have a question: in the thread above you said “Move some independent paths to another GP instance which is synced to the first”. How can I sync two instances?

Is there a way to divide a signal chain across two instances (the first one for amp simulation and IR, for example) and put all the FX in another instance?

GP does not provide any special tools for that; you’ll have to use tools from the underlying audio system. On macOS, CoreAudio gives you loopback and aggregate devices for free; on Windows there are third-party tools that provide loopback of audio and MIDI (alternative).