Many people don’t have a good grasp of how RAM, CPU “power” (core count), and clock speed are interrelated.

In the simplest terms, the core count (CPU power) is like the number of workers you have, and clock speed is how fast each worker operates. RAM is the amount of working space available for the “work” that is currently being processed.

A core is a single processing unit of the CPU. Modern CPUs can have anywhere from 2 to 64 cores (or even more in server setups). More cores mean the CPU can handle multiple tasks simultaneously, increasing its multitasking capabilities.

Measured in Gigahertz (GHz), clock speed indicates how many cycles a core can complete in a second. A higher clock speed means each core can process tasks faster.

RAM, or Random Access Memory, is a type of computer memory that stores data that is being actively used by the computer. It is a temporary storage space where the computer can quickly read and write data. Unlike permanent storage devices such as hard drives or SSDs, RAM provides much faster access to data, allowing the computer to retrieve and process information more rapidly.

As you can see from the above, any of these has the potential to act as a bottleneck for any of the others.

In general, and this is a vast oversimplification with numerous caveats, audio processing is largely linear (each step depends on the one before it), so it often can't take full advantage of the multiple cores a CPU may have. In my experience, faster clock speeds make a huge difference in running GP, assuming you have a modern CPU. The amount of RAM becomes significant depending on how sample-heavy your “instruments” (VSTs/AUs) are. Some VSTs/AUs, like the Korg Triton VST, are well known to be CPU/RAM intensive (for whatever reason) compared with instruments from other manufacturers.
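To put rough numbers on the cores-versus-clock point, here is a minimal sketch using Amdahl's law. It is only an illustration, not a measurement of GP or any plugin: the 20% parallel fraction, the core counts, and the 30% clock bump are all made-up values, and the model assumes throughput scales linearly with clock speed, which real CPUs only approximate.

```python
def speedup(parallel_fraction: float, cores: int, clock_ratio: float = 1.0) -> float:
    """Amdahl's law, scaled by a relative clock speed.

    parallel_fraction: share of the work that can be spread across cores (0..1).
    cores: number of cores available.
    clock_ratio: clock speed relative to a baseline machine (1.0 = same clock).
    """
    serial_fraction = 1.0 - parallel_fraction
    return clock_ratio / (serial_fraction + parallel_fraction / cores)


# Hypothetical workload: only 20% of the processing can run in parallel.
P = 0.2

baseline = speedup(P, cores=4)                       # 4 cores, baseline clock
more_cores = speedup(P, cores=16)                    # 4x the cores, same clock
faster_clock = speedup(P, cores=4, clock_ratio=1.3)  # same cores, 30% faster clock

print(f"4 cores, baseline clock: {baseline:.2f}x")
print(f"16 cores, same clock:    {more_cores:.2f}x")
print(f"4 cores, 30% more clock: {faster_clock:.2f}x")
```

With a mostly serial workload like this one, quadrupling the cores barely moves the result, while the modest clock increase helps the whole job. Raise `P` toward 1.0 and the picture flips in favor of more cores.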

As you stated, a recent, powerful CPU (Mac or Intel) with high clock speeds and lots of RAM will usually play any complex arrangement or instruments you might have. My two cents, for what it is worth: the most recent, powerful CPU and lots of RAM is a good investment and future-proofs your setup for a number of years. If you are trying to find something that is just good enough, you will have to find someone whose setup and use case approximate yours to determine what hardware will do the job. The tradeoff, as others have said, is to rearrange your setup into something that will run on the hardware you have or can afford. Good luck!

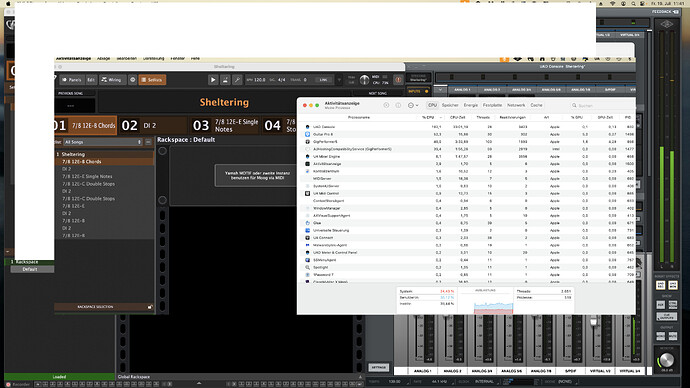

Bonus tidbit: I have an old (about 10 years old) HP Z840 workstation with dual Xeons and 128 GB of RAM that I can overload with GP and some large gig files, but my newer overclocked Intel i9 on a mini-ITX board with 64 GB of RAM doesn’t even get past 15% CPU usage. Modern hardware, speed, and lots of RAM rule the day in my world.